4. What is measured and how

Different types of testing and research

A distinction can be made between testing that is carried out as part of the design process and testing on finished products.

- Formative evaluations, i.e. evaluations carried out before the design is finalised, can inform design decisions either by detecting problems with some aspect of a single design (e.g. the type is too small) or by indicating which of two or more versions is easier to read. This form of testing is described as diagnostic testing when it pinpoints specific problems, and it is ideally used as part of an iterative design process: once a problem has been detected, it is resolved and the design is re-tested.

- User testing or user research compares different versions, and may be carried out as a formative evaluation to determine which version to develop further.

- If user testing is carried out as a summative evaluation, i.e. testing the final product, the results may provide recommendations for the design of future similar products. However, this practical guidance will be limited if it is not possible to determine why one version was better than another.

- Research studies make comparisons between different versions whilst controlling how they vary. From the results, it should be possible to say, for example, which typographic variable affects speed of reading. Provided it is carried out appropriately, such research can be considered robust, and its findings can be generalised to other design situations.

Question: Consider whether you have used a formative evaluation as part of your design process. For example, have you asked colleagues or friends for feedback about aspects of your design?

Challenges

Key criteria

The methods used for the first three types of testing above can be less formal than those used for research studies. In some circumstances, it may be unnecessary to meet all of the criteria listed below, or they may be less relevant. Nevertheless, it is helpful to know the main challenges to carrying out robust research that will be of value and relevance to both researchers and designers.

Although the three criteria are listed separately, they are interrelated. Finding a solution to one challenge may conflict with another, so a judgement must be made as to what to prioritise.

The key criteria in designing a study are:

- Sensitivity: finding a method of measuring performance on some aspect of reading that is sensitive enough to pick up differences when typography is varied.

- Reliability: ensuring that the results are repeatable. If you were to do the same study again, would you get the same outcome? One solution is to increase the amount of data collected. You can do this by using a sufficiently large number of participants and, where practical, giving each participant multiple examples of each condition of the experiment. These requirements present their own challenges: finding enough participants and fitting the experiment into a reasonable length of time.

- Validity: determining that the study measures what it is intended to measure. Of most relevance to legibility research, and to the designer’s perspective, is ecological validity, a form of ‘external validity’. This describes the extent to which a study approximates typical conditions, and is sometimes also referred to as ‘face validity’. In our context, this can mean a natural reading situation and suitable reading material. Another form of validity is ‘internal validity’, which describes whether the outcomes of the study can be attributed to the variable being studied rather than to other factors. This is explained further below.
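A minimal sketch of why collecting more data improves reliability: the standard error of a mean shrinks roughly in proportion to 1/√n, so an estimate such as average reading time varies less from one repetition of a study to the next. The reading times below are simulated from an assumed normal distribution with hypothetical parameters (60 s mean, 12 s standard deviation); they are not data from any study.

```python
import math
import random
import statistics


def standard_error(samples):
    """Standard error of the mean: sample standard deviation divided by sqrt(n)."""
    return statistics.stdev(samples) / math.sqrt(len(samples))


def simulate_reading_times(n, mean_s=60.0, sd_s=12.0, seed=1):
    """Draw n hypothetical reading times (seconds) from an assumed normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean_s, sd_s) for _ in range(n)]


if __name__ == "__main__":
    # Quadrupling n should roughly halve the standard error.
    for n in (10, 40, 160):
        times = simulate_reading_times(n)
        print(f"n={n:3d}  mean={statistics.mean(times):6.2f} s  SE={standard_error(times):5.2f} s")
```

Here n could stand for participants or for trials, but in a real analysis repeated trials from the same participant are not independent, so the two are not interchangeable.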

Reading conditions

Ecological validity is a concern not only of design practitioners but also of psychologists doing applied research. However, reading situations in experiments are frequently artificial and do not resemble everyday reading practice. As mentioned in Chapter 2, research has often looked at individual letters or words, rather than the reading of continuous text. The letter or word is often displayed for only a short time and participants may be required to respond quickly. Context is also removed, which means that:

- If testing individual letters, there are no cues from other letters which might help identification. Panel 4.1 provides an example of how the stylistic characteristics of a particular font, or style of handwriting, may help us identify letters.

- If testing words, there is no sentence context.

Clearly these are not everyday reading conditions, but there are compelling reasons for carrying out a study in this way. These techniques can be necessary to detect quite small differences in how we read, because skilled readers recognise words very quickly (within a fraction of a second). Any differences in legibility need to be teased out by focusing on one part of the reading process and making that process difficult enough for changes to be detected. This is a way of making the measure sensitive (one of the three criteria described above), but at the expense of ecological validity. Although some research does use full sentences and paragraphs, these may not always reveal differences, or may be testing different aspects of the reading process.

Designers, in particular, can also be critical of studies that measure speed of reading, claiming that how fast we read is not an important issue for them. Measuring speed of reading, or speed of responding to a single letter or word, is another technique used to detect small differences, chosen because it is reasonably sensitive. It is not the speed itself which is important but what it reveals, e.g. ease of reading or recognition.

Material used in studies

Another criticism relating to artificial conditions in experiments is the poor choice of typographic material, e.g. the typeface or the way in which the text is set (spacing, line length etc.). The objection is that designers would never create material in this form, so it is pointless to test it; the results will not inform design practice. In some cases, there is no reason for the poor typography of material used in a study except the researcher’s lack of design knowledge: the researcher may not be aware that it is not typical practice. In other cases, the researcher may need to control the design of the typographic material to ensure that the results are internally valid. If I am interested in the effect of line length, I could:

- Compare two line lengths and also vary the line spacing (see Figure 4.2). Experienced typographic designers increase the space between lines when lines are longer. But if I set the text in this way, I cannot be sure whether the line length, the spacing, or both have influenced my results. The line spacing is a confounding variable.

- Compare two line lengths without varying the line spacing (see Figure 4.3). But designers will say that they would not create material which looks like this.

In these two examples, there is a conflict between internal validity (ensuring that the study is designed correctly) and ecological validity. See Panel 4.2 for further detail of experimental design.

Question: Are you convinced by the reasons I have given for using unnatural conditions and test material? If not, what are your concerns?

The data in Figure 4.4 were taken from a very large series of studies in which the experimenters included all combinations of line length, line spacing and type size. Testing on this scale would not be carried out today, as it would not be considered feasible or efficient. Instead, the options would be limited to those shown in Figures 4.2 and 4.3:

- adjusting the spacing to suit each line length

- keeping the line spacing constant across all line lengths
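The difference between testing every combination (as in Figure 4.4) and the two reduced options above can be sketched by enumerating the experimental conditions. The line lengths, spacings and type sizes below are hypothetical placeholder values, and pairing larger spacing with longer lines in option 1 is a simplified assumption for illustration.

```python
from itertools import product

# Hypothetical levels, not taken from any particular study.
LINE_LENGTHS_PICAS = [10, 20, 30]
LINE_SPACINGS_PT = [0, 2, 4]      # extra space added between lines, in points
TYPE_SIZES_PT = [8, 10, 12]


def full_factorial():
    """Every combination of line length, line spacing and type size."""
    return list(product(LINE_LENGTHS_PICAS, LINE_SPACINGS_PT, TYPE_SIZES_PT))


def spacing_adjusted(size_pt=10):
    """Option 1: spacing increases with line length, so the two are confounded."""
    return [(length, spacing, size_pt)
            for length, spacing in zip(LINE_LENGTHS_PICAS, LINE_SPACINGS_PT)]


def spacing_constant(spacing_pt=2, size_pt=10):
    """Option 2: spacing held constant, so only line length varies."""
    return [(length, spacing_pt, size_pt) for length in LINE_LENGTHS_PICAS]


if __name__ == "__main__":
    print(len(full_factorial()), "conditions in the full design")
    print("Spacing adjusted:", spacing_adjusted())
    print("Spacing constant:", spacing_constant())
```

With only three levels per variable the full design already needs 27 conditions, which helps to explain why testing on that scale is no longer attempted.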

Question: If you were asked to advise a researcher who was interested in finding the optimum line length for reading from screen, which of the two options above would you recommend? Why?

Comparing typefaces

An even greater problem arises when more than one type of variation is built into the test material. The classic example is the comparison of a serif and a sans serif typeface. If a difference in reading speed is found, this could be due to the presence or absence of serifs, but it could also be due to other ways in which the two typefaces differ (e.g. contrast between thick and thin strokes). Researchers may overlook these confounding variables (which change along with the variable of interest), but their presence may invalidate the inferences that can be drawn. If we are less concerned about which stylistic feature of the typeface contributes to legibility and more interested in the overall effect, the results may still be valid.

Numerous studies have compared the legibility of different typefaces despite potential difficulties in deciding how to make valid comparisons. As a typeface has various stylistic characteristics, which have been shown to affect legibility, comparisons need to consider:

- How to equate for size. Although this may seem straightforward to many people, those with typographic knowledge are aware that typefaces set at the same point size can appear to be different sizes, depending on the height of the ascenders and capitals, the x-height, and the size of the counters (the spaces within letters). Matching the typefaces for x-height, rather than point size, helps to make them appear similar in size (see Figure 4.5).

- How to control for differences in weight and width, stroke contrast, and serifs.
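Matching typefaces by x-height rather than point size is simple arithmetic once each typeface's x-height is known as a fraction of its point size: target size = reference size × (reference x-height ratio ÷ target x-height ratio). The sketch below assumes those ratios have already been measured; the ratios in the example are hypothetical, not the metrics of any named typeface.

```python
def matched_point_size(ref_size_pt, ref_x_ratio, target_x_ratio):
    """Point size at which the target typeface's x-height equals the reference's.

    Each x_ratio is a typeface's x-height as a fraction of its point size
    (an assumed, pre-measured value).
    """
    if ref_size_pt <= 0 or ref_x_ratio <= 0 or target_x_ratio <= 0:
        raise ValueError("sizes and ratios must be positive")
    ref_x_height_pt = ref_size_pt * ref_x_ratio  # x-height of the reference, in points
    return ref_x_height_pt / target_x_ratio


# Hypothetical: reference at 10 pt with an x-height of 0.50 of the point size; the
# comparison typeface has a smaller x-height (0.40), so it must be set larger.
print(round(matched_point_size(10, 0.50, 0.40), 2))  # 12.5
```

In practice the ratio might be read from a font's metrics, for example the OpenType OS/2 table's sxHeight divided by the head table's unitsPerEm, although not every font records sxHeight, so measuring rendered letters may be more dependable.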

Collaborations across disciplines have resulted in type designers making experimental modifications to typefaces (Box 4.1). This approach would appear to provide the ideal solution, but it requires a significant contribution from type designers.

Illustrating test material

Graphic designers work with visual material and can be frustrated to find that many studies reported in journals do not illustrate the material used. Consequently, readers are left to work out what was presented to the participants. This may reflect researchers giving priority to the results of the study (illustrating data in graphs), but some printed journals have also restricted illustrations for economic reasons. Many journals now publish online and include interactive versions of articles, which allow for additional supporting material. This has led to more illustrations and greater transparency in reporting the methods, materials and procedures used in studies.

Familiarity

Chapter 1 introduced the view, held by some, that legibility results reflect our familiarity with the test material. According to this view, we will find it easier to read something which we have been accustomed to reading. This seems to make a lot of sense as we do improve with practice. However, this also creates a significant challenge for experimenters. How can we test a newly designed typeface against existing typefaces, or propose an unusual layout, without disadvantaging the novel material? More fundamentally, when legibility research confirms existing practices, based on traditional craft knowledge, can we be sure that these practices are optimal? Might they instead be the forms which we are most used to reading? This conundrum was raised by Dirk Wendt in writing about the criteria for judging legibility (Wendt, 1970, p43).

Research by Beier and Larson (2013), described more fully in Chapter 7, examines familiarity directly, rather than treating it as a confounding variable which causes problems. This research addresses how we might improve on existing designs without being constrained by what we have read in the past.

Methods

The tools used to measure legibility have understandably changed over time, primarily from mechanical to computer-controlled devices. The older methods are summarised in Spencer (1968) and described in more detail in Tinker (1963, 1965) and Zachrisson (1965). Despite the changes in technology, many of the underlying principles have remained the same, but we now use different ways to capture the data. There are two broad categories of methods:

- objective, measuring behaviour or physical responses

- subjective, asking readers for opinions

Threshold and related measures

As described in Chapter 1, when reading we first need to be able to experience the sensation of images (letters) on our retina. We also know that we read by identifying letters, which we then combine into words (Chapter 2). With this knowledge, it makes sense to measure how easy it is to identify letters or words while varying the typographic form (e.g. different typefaces or sizes). One technique used is the threshold method, which aims to measure the point at which we can first detect and identify the letter or word. This might be the greatest distance, the lowest contrast, or the smallest type size at which identification is still possible.

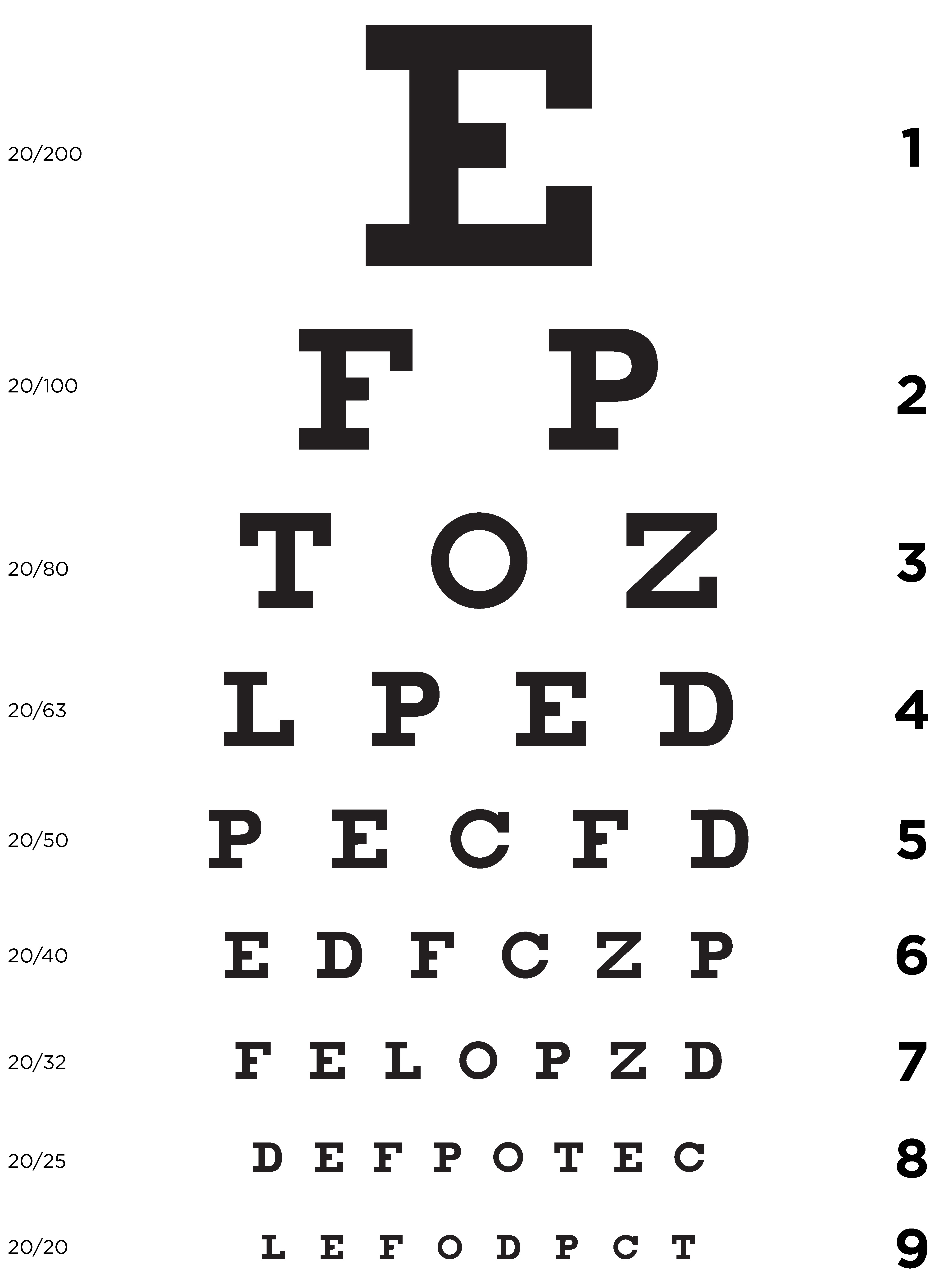

Eye tests are typically carried out in a similar way, by obtaining a threshold measurement. When having our eyes tested, we may be asked to read from a Snellen chart, on which the letters decrease in size as we go down the chart (Figure 4.8). The point at which we can no longer decipher the letters is our threshold. This measures letter acuity, as the test uses unrelated letters and unconstrained viewing time.

The eye test uses a similar principle to distance thresholds, except that the size of type is varied and we remain seated at the same distance from the chart. The visual angle changes in both cases, as it depends on both size and distance (see Figure 3.2). In the eye test procedure, the visual angle decreases until we can no longer read the letters; distance threshold measures work in the opposite direction, increasing the visual angle until we are able to identify the image.
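The visual angle θ subtended by a letter of height s viewed from distance d is θ = 2·arctan(s / 2d), which is why changing either the size or the distance changes it. The sketch below computes the angle in minutes of arc for both procedures; the sizes and distances are illustrative assumptions. For orientation, letters on the 6/6 (20/20) line of a Snellen chart are conventionally designed to subtend 5 minutes of arc, which at 6 m corresponds to a letter height of about 8.7 mm.

```python
import math


def visual_angle_arcmin(size_mm, distance_mm):
    """Visual angle, in minutes of arc, subtended by an object of height size_mm
    viewed from distance_mm (both in the same units)."""
    radians = 2 * math.atan(size_mm / (2 * distance_mm))
    return math.degrees(radians) * 60


# Eye test: distance fixed at 6 m while letter size shrinks, so the angle falls.
for size_mm in (17.5, 8.7, 4.4):
    print(f"{size_mm:4.1f} mm letter at 6 m: {visual_angle_arcmin(size_mm, 6000):5.2f} arcmin")

# Distance threshold: letter size fixed while the material moves closer, so the angle rises.
for distance_mm in (12000, 9000, 6000):
    print(f"8.7 mm letter at {distance_mm / 1000:4.1f} m: "
          f"{visual_angle_arcmin(8.7, distance_mm):5.2f} arcmin")
```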

Question: Explain why the distance threshold measure needs to start with an image that is too far away to identify and is then moved closer. If you are not sure, read on to find the answer.

The accounts of older methods to test legibility include descriptions of tools which measured thresholds and more general approaches to using thresholds:

- The visibility meter used filters to vary the contrast between the image and the background. The aim was to identify the smallest contrast that still preserves legibility. It has been used to measure the relative legibility of different typefaces using letters or words.

- The focal variator used a similar principle to the visibility meter: a blurred image was projected onto a ground glass screen, and the distance at which the image became recognisable was measured. This device was limited to letters.

- A more general method of measuring distance thresholds, which is still in use, is simply to find out how far away something can still be recognised, by starting at a great distance and gradually moving the material closer to the participant. The answer to the question above is that the test must be done in this direction because, once we have identified something, we cannot accurately report the point at which we would no longer be able to see it. The method is appropriate for testing signs or other material that would normally be read at a distance, but is also applied in other contexts (see Chapters 5 and 6).

- A similar principle is applied when measuring how far out into the periphery an object (e.g. a letter) can be placed and still be recognised. Participants are asked to fixate on a specific point, so that they do not move their eyes to focus on the object. Our visual acuity for letters in peripheral vision decreases with eccentricity (i.e. distance from the fovea).
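The ascending direction of the distance threshold method can be sketched as a loop that starts too far away and steps closer until the first correct identification. The participant is replaced here by a hypothetical simulated observer, and the start distance and step size are arbitrary assumptions; a real study would repeat the procedure across targets and participants, since a single run is unlikely to be reliable.

```python
from typing import Callable, Optional


def ascending_distance_threshold(
    identifies_at: Callable[[float], bool],
    start_m: float = 30.0,
    step_m: float = 0.5,
    min_m: float = 0.5,
) -> Optional[float]:
    """Start too far away to identify the target, move closer in fixed steps, and
    return the first distance at which it is identified (None if never identified)."""
    distance = start_m
    while distance >= min_m:
        if identifies_at(distance):
            return distance
        distance -= step_m
    return None


def make_simulated_observer(critical_distance_m: float) -> Callable[[float], bool]:
    """Hypothetical observer who identifies the target at or within a fixed distance."""
    return lambda d: d <= critical_distance_m


if __name__ == "__main__":
    observer = make_simulated_observer(12.3)
    print(ascending_distance_threshold(observer))  # 12.0 with these step settings
```

The recorded threshold can only be as precise as the step size allows, which is one reason the step is a design decision in its own right.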

Panel 4.3 describes a sophisticated means of using the threshold to take account of differences among readers.

The short exposure method can be used to measure the threshold (the exposure time needed to identify a letter or word) or to set a suitable level of difficulty for participants. Before computers were routinely used in experiments, a tachistoscope controlled exposure time by presenting and then removing the image. Exposure is now typically computer-controlled, and one form of short exposure presentation is Rapid Serial Visual Presentation (RSVP). Single words are displayed sequentially on screen in the same position, which means we don’t need to make eye movements (saccades).

RSVP has been used in reading research since 1970, but has recently emerged as a practical technique for reading from small screens, as the sequential presentation takes up less space. RSVP has also been developed into apps promoted as a way of increasing reading speed. The value of RSVP as a research method for testing legibility is that the experimenter can adjust the rate at which a series of words, which can form sentences, is presented. However, as with some of the other techniques above, it is only possible to investigate typographic variables at the letter and word level (e.g. typeface, type variant, type size, letter spacing).
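In RSVP the presentation rate is set by how long each word remains on screen: at w words per minute, each word is shown for 60,000 / w milliseconds. This sketch assumes the same duration for every word, which is a simplification, and the sentence is an arbitrary example.

```python
def rsvp_word_duration_ms(words_per_minute):
    """Display time per word, in milliseconds, for an RSVP stream at a given rate."""
    if words_per_minute <= 0:
        raise ValueError("rate must be positive")
    return 60_000 / words_per_minute


def rsvp_schedule(sentence, words_per_minute):
    """Return (onset_ms, word) pairs. Each word replaces the previous one in the
    same screen position, so the reader does not need to make saccades."""
    duration = rsvp_word_duration_ms(words_per_minute)
    return [(round(i * duration), word) for i, word in enumerate(sentence.split())]


if __name__ == "__main__":
    for rate in (200, 400, 800):
        print(rate, "wpm ->", rsvp_word_duration_ms(rate), "ms per word")
    print(rsvp_schedule("The quick brown fox", 300))
```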

The threshold-related methods above typically ask the participant to identify what they see (e.g. a letter or word). Either these responses form the results (e.g. the number of correct responses), or the distance, exposure time or eccentricity corresponding to a certain level of correct responses is recorded.
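When the recorded value is the exposure time (or distance, or eccentricity) corresponding to a certain level of correct responses, it can be estimated from performance at several tested levels. This sketch uses linear interpolation between the two levels that bracket an assumed 75% criterion; fitting a psychometric function is the more usual approach, and the data are invented for illustration only.

```python
def threshold_by_interpolation(levels, proportions, criterion=0.75):
    """Estimate the level (e.g. exposure time in ms) at which proportion correct
    first reaches `criterion`, interpolating linearly between the bracketing levels.

    Assumes `levels` is sorted in ascending order and that performance improves
    as the level increases. Returns None if the criterion is never reached.
    """
    pairs = list(zip(levels, proportions))
    if pairs and pairs[0][1] >= criterion:
        return pairs[0][0]
    for (x0, p0), (x1, p1) in zip(pairs, pairs[1:]):
        if p0 < criterion <= p1:
            return x0 + (criterion - p0) * (x1 - x0) / (p1 - p0)
    return None


# Hypothetical data: exposure durations (ms) and proportion of letters identified.
exposures_ms = [20, 40, 60, 80, 100]
proportion_correct = [0.10, 0.35, 0.65, 0.85, 0.95]
print(threshold_by_interpolation(exposures_ms, proportion_correct))
```

With these invented values the 75% point falls midway between 60 ms and 80 ms, i.e. at about 70 ms.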

Speed and accuracy measures

As mentioned in Chapter 3 and earlier in this chapter, speed of reading is a common way of measuring ease of reading, even though the primary concern of designers may not be to facilitate faster reading. If the letters are difficult to identify, we make more eye fixations (pauses), and longer ones, which slows down reading; more effort is also likely to be expended.

Measures of speed are often combined with some measure of accuracy. This might be accuracy of:

- identifying isolated letters or words

- reading words in sentences and continuous text

- proofreading

- remembering (often referred to as recall)

- understanding (comprehension)

Accuracy can therefore go beyond getting the letters or words correct to include recall or comprehension. If letter or word recognition is tested, accuracy may be measured together with exposure time. As we can trade speed for accuracy when we read, some researchers combine these two measures. If I decide to read very quickly, I am likely to remember and understand less of the text, because I am trading off speed and accuracy. If continuous text is read, a test of comprehension is important to check that a certain level of understanding is reached.
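One way of combining speed and accuracy into a single figure, borrowed from the wider psychology literature and not necessarily the combination used in the legibility studies discussed here, is the inverse efficiency score: mean response time on correct trials divided by the proportion of trials answered correctly. It is generally considered unreliable when accuracy is low. The trials below are hypothetical.

```python
from statistics import mean


def inverse_efficiency(trials):
    """Inverse efficiency score: mean response time on correct trials divided by
    the proportion of trials answered correctly (lower is better).

    `trials` is a list of (response_time_ms, correct) pairs.
    """
    if not trials:
        raise ValueError("no trials")
    correct_rts = [rt for rt, ok in trials if ok]
    if not correct_rts:
        raise ValueError("no correct trials")
    proportion_correct = len(correct_rts) / len(trials)
    return mean(correct_rts) / proportion_correct


# Hypothetical trials for two conditions (not real data).
fast_but_careless = [(500, True), (520, True), (480, False), (510, False)]
slower_but_accurate = [(600, True), (620, True), (580, True), (610, False)]
print(inverse_efficiency(fast_but_careless), inverse_efficiency(slower_but_accurate))  # 1020.0 800.0
```

Although responses in the first condition are faster, its many errors give it the worse (higher) score, which is the speed-accuracy trade-off described above.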

Question: Do you think recall or understanding is more important than speed of reading? Are there any circumstances when speed might be more important?

Measuring legibility by the speed of reading continuous text can approximate the everyday reading situation more closely. Both silent reading and reading aloud have been used by researchers, though silent reading tends to be more common. If participants read aloud, the number of words correctly identified can be measured. Comprehension measures for silent reading include:

- summaries of what has been read

- identifying an error in a sentence, which affects the meaning

- cloze procedure where words are omitted at regular intervals within a text and a suitable word must be inserted into the gap

- open-ended or short answer questions

- multiple choice questions
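Constructing cloze material is mechanical once the deletion interval is chosen; deleting every fifth word is a frequently used choice, but here the interval is just a parameter. This sketch assumes whitespace-separated words and exact-word scoring. Exact scoring is the easiest to automate, whereas accepting any suitable word, as the procedure above allows, requires a judgement for each response.

```python
import string


def make_cloze(text, n=5, blank="______"):
    """Replace every nth word of `text` (the nth, 2nth, 3nth ...) with a blank.

    Trailing punctuation stays in the text; the deleted words are returned
    as the answer key.
    """
    tokens = text.split()
    answers = []
    for i in range(n - 1, len(tokens), n):
        word = tokens[i].rstrip(string.punctuation)
        trailing = tokens[i][len(word):]
        answers.append(word)
        tokens[i] = blank + trailing
    return " ".join(tokens), answers


def score_exact(responses, answers):
    """Proportion of gaps filled with exactly the deleted word (ignoring case)."""
    if not answers:
        return 0.0
    hits = sum(r.strip().lower() == a.lower() for r, a in zip(responses, answers))
    return hits / len(answers)


gapped, key = make_cloze("The quick brown fox jumps over the lazy dog and runs into the woods.")
print(gapped)  # The quick brown fox ______ over the lazy dog ______ runs into the woods.
print(key)     # ['jumps', 'and']
print(score_exact(["jumps", "then"], key))  # 0.5
```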

As a researcher, I have made decisions as to which comprehension measure to use. In doing so, I have weighed up the difficulty of preparing the test material against the difficulty of scoring the results. Table 4.1 summarises my assessment of each of the measures in terms of these two considerations. Panel 4.4 explains the reasons for my assessment and some pointers to good practice when carrying out a study.

When comparing results across different texts with different content, the questions on each text need to be at a similar level of difficulty, with answers located in similar regions of the texts. Likewise, when identifying errors, the particular words changed, their position, and how they are changed require careful attention. Various standardised tests have been developed which address these issues:

- Nelson-Denny test (1981), originally developed in 1929, is a multiple-choice test.

- Chapman-Cook Speed of Reading test (1923) has 30 items of 30 words each. In each item there is one word that spoils the meaning and the reader is asked to cross out this word. There is a time limit of 1.75 minutes.

Question: Which is the word that spoils the meaning in the item below?

If father had known I was going swimming he would have forbidden it. He found out after I returned and made me promise never to skate again without telling him.

- Tinker Speed of Reading test (1947) is similar to Chapman-Cook but with 450 items of 30 words each. The time limit is 30 minutes.

Question: Which is the word that spoils the meaning in the item below?

We wanted very much to get some good pictures of the baby, so in order to take some snapshots at the picnic grounds, we packed the stove into the car.

Some authors refer to speed of reading as ‘rate of work’. This more generic term can cover other types of reading such as scanning text for particular words (as you might in a dictionary or if you are looking for a particular paragraph in a printed text), skim reading or filling in a form.

Physiological measures

In the methods described above, the measure is the participant’s response, how fast they respond, or some aspect related to the material (e.g. exposure time, distance from material). Another approach is to take physical measurements of participants, which have included pulse rate, reflex (involuntary) blink rate, and eye movements. These have been described as unconscious processes (Pyke, 1926, p30), which are automatic, whereas participants are conscious of the responses recorded in threshold, speed, and accuracy measures. An increased pulse rate is supposed to indicate that the participant is working harder. Similarly, an increase in blink rate is assumed to mean that legibility is reduced. However, in both cases, other (confounding) factors may be influencing the measure.

Eye movement measurement, also described as eye tracking, has survived as a technique and now uses far more sophisticated technology than the original work around the beginning of the twentieth century (see Chapter 3: Historical perspective). The most widely used current technique records movements by shining a beam of invisible (infrared) light onto the eye, which is reflected back to a sensing device. From this, it is possible to calculate where the person is looking. Typical measurements include:

- frequency or number of fixations (pauses)

- duration of fixations

- number of regressions

The advantage of looking at these individual measures, rather than overall reading speed, is that there may be a trade-off between the number of fixations and their duration. We may make many very short fixations or, conversely, a few longer ones; both may result in the same overall reading time. Regressions indicate difficulty in identifying letters or words, requiring back-tracking to re-fixate on the relevant part of the text. Another advantage of this technique is that we can measure reading of continuous text in a reasonably natural situation. It is not entirely natural, as participants commonly need to wear devices strapped to their head. Eye tracking is also used to explore specific regions of interest (ROI) in advertisements or web pages to see what attracts attention.
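The trade-off between the number and duration of fixations becomes visible when fixation records are summarised, rather than only total reading time. In this sketch each fixation is an assumed (horizontal position, duration) pair for a single line of left-to-right text, a regression is counted whenever a fixation lands to the left of the previous one, and both readers are hypothetical. Real eye-tracking data would also need the return sweeps between lines handled separately, which this sketch ignores.

```python
from statistics import mean


def fixation_summary(fixations):
    """Summarise one line of eye-tracking data.

    `fixations` is a list of (x_position, duration_ms) in the order they occurred.
    A regression is any fixation landing left of the one before it.
    """
    durations = [d for _, d in fixations]
    regressions = sum(
        1 for (x_prev, _), (x_cur, _) in zip(fixations, fixations[1:]) if x_cur < x_prev
    )
    return {
        "fixations": len(fixations),
        "mean_duration_ms": mean(durations),
        "total_ms": sum(durations),
        "regressions": regressions,
    }


# Two hypothetical readers of the same line with equal total reading time.
many_short = [(2, 150), (9, 150), (16, 150), (12, 150), (23, 150), (31, 150)]
few_long = [(4, 300), (17, 300), (29, 300)]
print(fixation_summary(many_short))
print(fixation_summary(few_long))
```

Both readers take 900 ms in total, yet one makes twice as many fixations of half the duration and also makes a regression, differences that a single reading-time figure would hide.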

Although the technique was introduced to measure readers’ emotions, changes in facial expression may also indicate the degree of effort exerted and therefore ease of reading (Larson, Hazlett, Chaparro and Picard, 2006). Facial electromyography (EMG) measures tiny changes in the electrical activity of muscles. Activity in the muscle which controls smiling around the eyes, for example, is thought to be largely unconscious and may therefore reflect emotion or effort which might not be reported (see Subjective judgements below).

As mentioned in Chapter 2, when describing how we read different typefaces, electroencephalography (EEG) has recently been applied in research on letter recognition. Although this research did not set out to investigate legibility, differences in the level of neural activity were found between low- and high-legibility typefaces. EEG may therefore have potential as a means of measuring brain activity to infer how typographic variables influence legibility.

Subjective judgements

This procedure asks people what they think of different examples of material in relation to a particular criterion. Visual fatigue has been measured in this way, by asking people to rate their fatigue on a scale from no discomfort to extreme discomfort. Mental or perceived workload has also been assessed using the NASA Task Load Index (NASA-TLX). As these estimates can be influenced by other factors, a more reliable approach is to measure visual fatigue objectively, using physiological measurements. This has been done using equipment which can simultaneously measure pupillary change, focal accommodation, and eye movements.

A common way of employing subjective judgements in a study is to ask participants which material they think is easiest to read, or which they prefer. These judgements are quite often combined with other methods, such as speed and accuracy of reading. The procedure can vary from asking the participant to rank or rate a number of alternatives to asking them to compare pairs (see Panel 4.5).
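For paired comparisons, each participant sees every pair of alternatives and chooses one. A minimal way of turning those choices into a ranking is to count how often each alternative is chosen; more formal scaling models, such as Thurstone's or the Bradley-Terry model, are also used for this. The typeface labels and choices below are hypothetical.

```python
from collections import Counter
from itertools import combinations


def all_pairs(items):
    """Every unordered pair to present: n alternatives give n * (n - 1) / 2 pairs."""
    return list(combinations(items, 2))


def rank_by_wins(choices):
    """Rank alternatives by the proportion of their comparisons they won.

    `choices` is a list of (option_a, option_b, chosen) tuples, where `chosen`
    is the alternative judged easier to read (or preferred).
    """
    wins, shown = Counter(), Counter()
    for a, b, chosen in choices:
        if chosen not in (a, b):
            raise ValueError(f"{chosen!r} was not one of the pair {a!r}, {b!r}")
        shown[a] += 1
        shown[b] += 1
        wins[chosen] += 1
    return sorted(shown, key=lambda item: wins[item] / shown[item], reverse=True)


typefaces = ["A", "B", "C"]          # hypothetical typeface labels
print(all_pairs(typefaces))          # [('A', 'B'), ('A', 'C'), ('B', 'C')]
choices = [("A", "B", "A"), ("A", "C", "C"), ("B", "C", "C")]
print(rank_by_wins(choices))         # ['C', 'A', 'B']
```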

Summary

Having a range of methods to test legibility can be viewed as positive, as they may have different applications or may be combined within the same study. However, concerns have been raised as to whether studies of single letters or words can tell us anything about everyday reading. It may be tempting to dismiss results from threshold measures of individual characters, but we should remember that reading starts with identifying individual characters. If individual characters cannot easily be identified, there is likely to be a problem in reading. Also, it is frequently easier to find differences using threshold measurements than using measures which are closer to the everyday reading process. It is rather pointless to argue for a method which will probably not be sensitive enough to detect differences in legibility, assuming they exist. Moreover, it is not feasible to study the complete natural reading experience, which will be influenced by numerous variables.

We do, however, need to be aware of the limitations of methods which do not involve reading continuous text. When letters or words are shown individually, the reading environment is changed and the effects of many typographic variables cannot be assessed. We are unable to test the effects of changes to word spacing, line length, line spacing, number of columns, alignment, margins, and headings. If we wish to investigate these aspects of typography, we will probably need to approximate natural reading conditions more closely.

The objectives of the study will also guide the choice of method. We should make a clear distinction between testing alternatives as part of the design process and research studies which are intended to inform researchers and designers. In evaluating the value, appropriateness, validity and reliability of any study, the context will determine how and what we measure.